🌟 Editor's Note: Recapping the AI landscape from 01/06/26 - 01/12/26.

🎇✅ Welcoming Thoughts

Welcome to the 26th edition of NoahonAI.

What’s included: company moves, a weekly winner, AI industry impacts, practical use cases, and more.

Without a doubt, the strongest week across the NVIDIA5 since I started tracking the race.

I went to an AI & Data event in Cleveland Monday night. Lots of concepts we talk about here were being discussed there. Encouraging stuff.

Found a global AI tracker. Here’s a tweet summarizing 2025 NVIDIA5 usage. The full report is even better.

Hard to believe the numbers on Claude are accurate. They seem so low compared with the usage I hear about from the people I talk with.

Startup Spotlight this week is a fun one.

Anthropic blocked xAI engineers from using Claude… I don’t think Anthropic engineers have to worry about repercussions (they’re not using Grok).

xAI spent $8B in cash in 2025, mainly for data centers, talent, and software.

Seems like they are now spending heavily on robotics infrastructure. Finally!

Gemini thinking is great. Gemini fast is… not great. More on that in PUC.

Everyone and their parent (company) wants a new data center.

Trump is pushing data centers to cover more of their own energy costs.

New Claude launch is absolutely blowing up tech twitter.

Strong week for OpenAI (growth). Brutal week for OpenAI (competition).

We need a cable-style bundle, but for media-site article subscriptions.

Almost every NVIDIA5 company this week would’ve won the race last week.

May run a bit long but one of the most enjoyable ones to write.

Let’s get started—plenty to cover this week.

👑 This Week’s Winner: Google // Gemini

Google Wins the Week: Gemini Lands Apple. Google just scored the distribution win everyone’s been chasing: Gemini is headed into Siri and Apple Intelligence, instantly putting Google’s AI in front of billions of users and locking in a massive wedge against OpenAI.

Apple x Google Gemini Deal: Apple will use Gemini to power parts of Siri’s overhaul as well as Apple Intelligence, giving Gemini reach across Apple’s global device base while Apple keeps its privacy policy. The deal had been in the works but it’s now official. What a humongous win for Google.

Universal Commerce Protocol (UCP): Google launched a new framework so AI agents can shop and check out end-to-end across major retailers, pushing Gemini from “answers” into “transactions.” This is flying under the radar a bit but it’s a big deal. Google will likely try and make UCP the standard for AI transactions, like Anthropic did with MCP for AI connections.

Gemini Enterprise for Customers: Google is rolling out Gemini-powered AI agents that handle customer discovery, shopping, and support in one unified enterprise platform. Think of UCP as the blueprint and this as the working operation.

And that’s not all. Alphabet briefly reached a $4T valuation (joining only Apple, NVIDIA, and Microsoft), and Gemini is rolling deeper into Gmail with smarter summaries and inbox help. Finally, in more somber news, Google appears to have settled multiple lawsuits pertaining to chatbot-related suicides.

From Top to Bottom: OpenAI, Google Gemini, xAI, Meta AI, Anthropic, NVIDIA.

⬇️ The Rest of the Field

Who’s moving, who’s stalling, and who’s climbing: Ordered by production this week.

🟢 OpenAI // ChatGPT

ChatGPT for Health + Enterprise: Launched a consumer Health mode inside ChatGPT for uploading records/PDFs, summarizing labs, tracking trends, and prepping for doctor visits. Also rolled out an enterprise workspace and API stack aimed at HIPAA deployments for clinicians. Great move! With 40M+ using GPT for health daily this was a natural progression. Smart enterprise addition too. Health data is kept separate from GPT. Waitlist only for now.

Two Key Acquisitions: OpenAI is buying Torch (health records) to strengthen Health data ingestion and acqui-hiring Convogo (AI coaching/assessments) to deepen enterprise workflow products. More strong moves here.

Stargate Texas build: OpenAI + SoftBank put $1B into SB Energy for a 1.2GW Texas AI data center under the Stargate infrastructure plan. I expect to see a lot of these headlines over the next 12-24 months.

🟠 Anthropic // Claude

Claude for Healthcare: Healthcare tool for providers/payers, focused on admin workflows like prior auth, claims, triage, and record summaries. Seems like this is starting as enterprise then rolling out for consumer use. Can’t imagine OpenAI is happy about it.

Cowork launch: New desktop “agent mode” for non-coders that can work across your files and connected apps (early macOS preview), with safety controls for agent actions. There is a ton of hype around this launch. I’m excited to try it out. Only for Max users right now.

Allianz partnership: Allianz, one of the world’s largest insurers, is rolling out Claude and Claude Code globally and co-building insurance-specific AI agents with full audit and compliance logging. Huge get for Claude enterprise.

⚪️ NVIDIA

NVIDIA + Eli Lilly: Lilly and NVIDIA are putting up to $1B over five years to open a Bay Area AI co-innovation lab, pairing NVIDIA engineers with Lilly scientists to speed up drug discovery. This is awesome. Very bullish on AI drug discovery / healthcare.

Industrial AI: NVIDIA and Siemens Energy are building an “industrial AI operating system” to embed AI into design and factory operations. Nice. Welcome to the year of ‘Physical AI’.

Autonomous Labs: NVIDIA and Thermo Fisher are baking NVIDIA’s AI into lab instruments so experiments can be planned, run, and analyzed with less manual work. See above comment.

🔵 Meta // Meta AI

Nuclear Deals for Data Centers: Meta signed long-term nuclear agreements (Vistra, TerraPower, Oklo) to lock in up to ~6.6 GW of power by 2035, anchored by its Prometheus AI buildout in New Albany, Ohio. Meta securing energy before buildouts. Opposite of Grok and OpenAI. Smart.

“Meta Compute” Infrastructure: Meta created a top-level group to run AI infrastructure + energy build-out at gigawatt scale, with leadership focused on long-range capacity planning and supplier partnerships. I like this strategy a lot. Can they execute?

DPM Named President: Meta elevated Dina Powell McCormick into a top leadership role to help steer strategy and major capital/infrastructure partnerships as the company ramps AI data-center investment. Former Trump advisor.

🔴 xAI // Grok

$20B Series E Raise: xAI closed a new $20B round to fund GPUs, data centers, and new Grok products, with reports putting valuation around $230B. AI is a capital intensive game right now. Good move.

Grok Image Backlash: After image altering abuse, xAI limited Grok image tools to verified paying users as regulators escalated scrutiny, including a formal Ofcom investigation. Investigate.

New Data Center: xAI announced a $20B+ data-center campus in Southaven, Mississippi to expand U.S. compute for training and inference. Largest private investment in MS history. Cool.

🚑 Impact Industries 🩺

Medical // Clinical Automation

Utah is piloting an autonomous AI system that can legally participate in routine prescription renewals for chronic conditions. Through a partnership with Doctronic inside the state’s AI regulatory sandbox, patients can request refills anytime, while the AI helps determine whether a standard renewal is appropriate. The pilot will track safety outcomes, refill timeliness, clinician workload, patient experience, and cost impact, with results planned for public release. This is just the beginning.

Health // Sleep Predictor

Stanford researchers built SleepFM, a foundation model trained on nearly 600,000 hours of polysomnography data, to read the “language” of sleep. After learning patterns across brain waves, heart signals, breathing, and movement, the model was fine-tuned to forecast future health risks. In linked long-term records, it predicted over 100 conditions including dementia, Parkinson’s, heart disease, and some cancers, suggesting sleep studies could become powerful early warning systems.

💻 Interview (Panel) Highlight: Bloomberg Tech at CES

Interview Outline: This panel marks the shift from digital chatbots to "Physical AI," where intelligence moves into the real world through robotics and manufacturing. The leaders discuss the migration of AI compute from massive data centers directly onto local PCs and "edge" devices.

About the Interviewees: Caroline Hyde is a Bloomberg TV anchor covering global technology and markets. Jim Johnson is an Intel executive focused on PC and edge strategy. Jensen Huang is the co-founder and CEO of NVIDIA. Cristiano Amon is the CEO of Qualcomm. Amnon Shashua is the co-founder and CEO of Mobileye and a pioneer in computer vision and autonomy.

Interesting Quote: “Industrial robots, like those restocking shelves, are happening as early as 2026.”

My Thoughts: CES is a cool event because you get a look into what may be the new norm a few years down the line. As I mentioned last week, the transformation into Physical AI is going to be a fun one to watch. At the end of the day we may not need “AI toothbrushes”, but with so many people building and innovating, we’re bound to figure out exactly what works and which current visions will become staples of the future.

Condensed Interview Highlights — CES 2026

Q: We’re seeing AI in everything at CES this year. What’s the most surprising thing you’ve seen?

Caroline Hyde (Bloomberg): “It’s the sheer variety. We’re seeing AI toothbrushes that tell you if you’ve missed a spot, AI mirrors, and a lot of experimentation around new form factors for the future.”

Q: We’ve been hearing about ‘AI PCs’ for years. What’s the real killer app now?

Jim Johnson (Intel): “For mobile gaming, it’s multi-frame generation. AI effectively quadruples frame rates for ultra-smooth play. We now have the equivalent of 40 data centers’ worth of compute sitting right at the edge.”

Q: Nvidia’s new GPUs consume massive power. How do you justify that footprint?

Jensen Huang (NVIDIA): “Each Rubin GPU uses 240,000 watts, but it’s 10x more energy and cost efficient than the prior generation. We spent 15,000 engineer-years building it. This is the start of a new industrial revolution.”

Q: Why is Qualcomm betting so heavily on robotics?

Cristiano Amon (Qualcomm): “Robotics is fundamentally an Edge AI problem. You can’t put a server in a robot. High performance, low power, sensing—it all has to happen at the edge.”

Q: Why did Mobileye spend $900M acquiring a humanoid robotics company?

Amnon Shashua (Mobileye): “Humanoid robotics is a natural extension of physical AI. From a technology and talent perspective, it’s highly synergistic with what we already do.”

👨‍💻 Practical Use Case: Switching Models

Difficulty: Basic

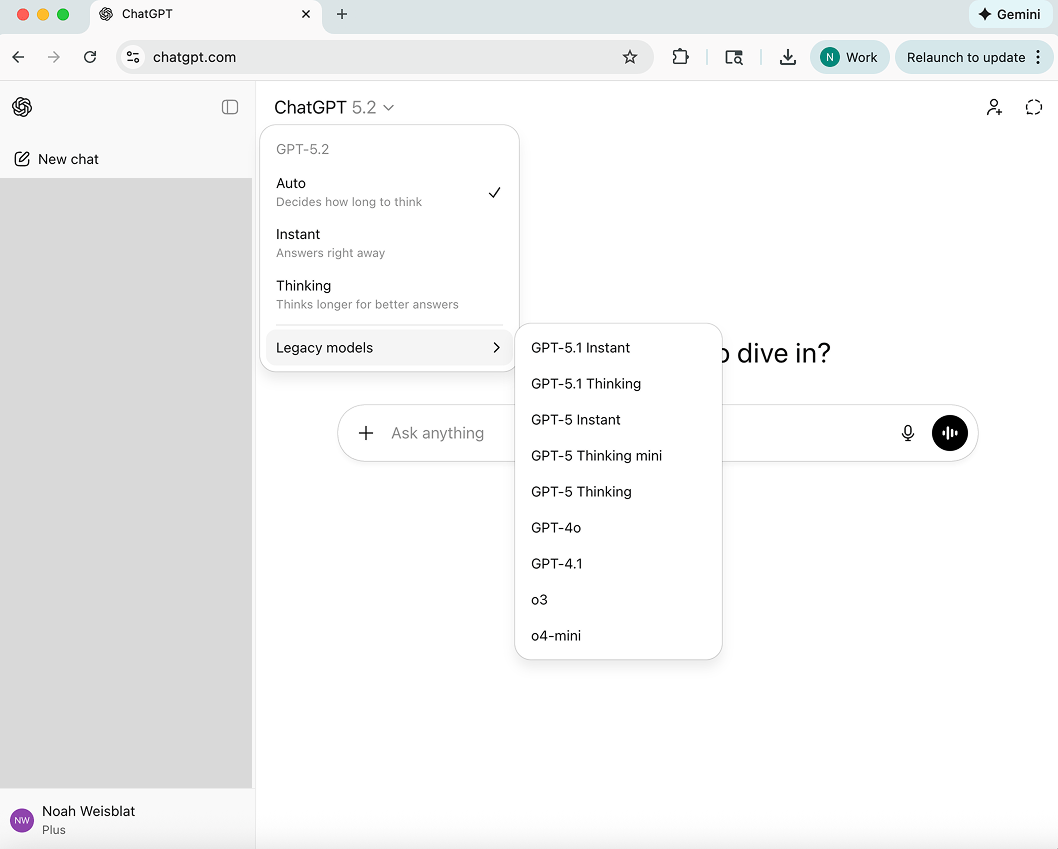

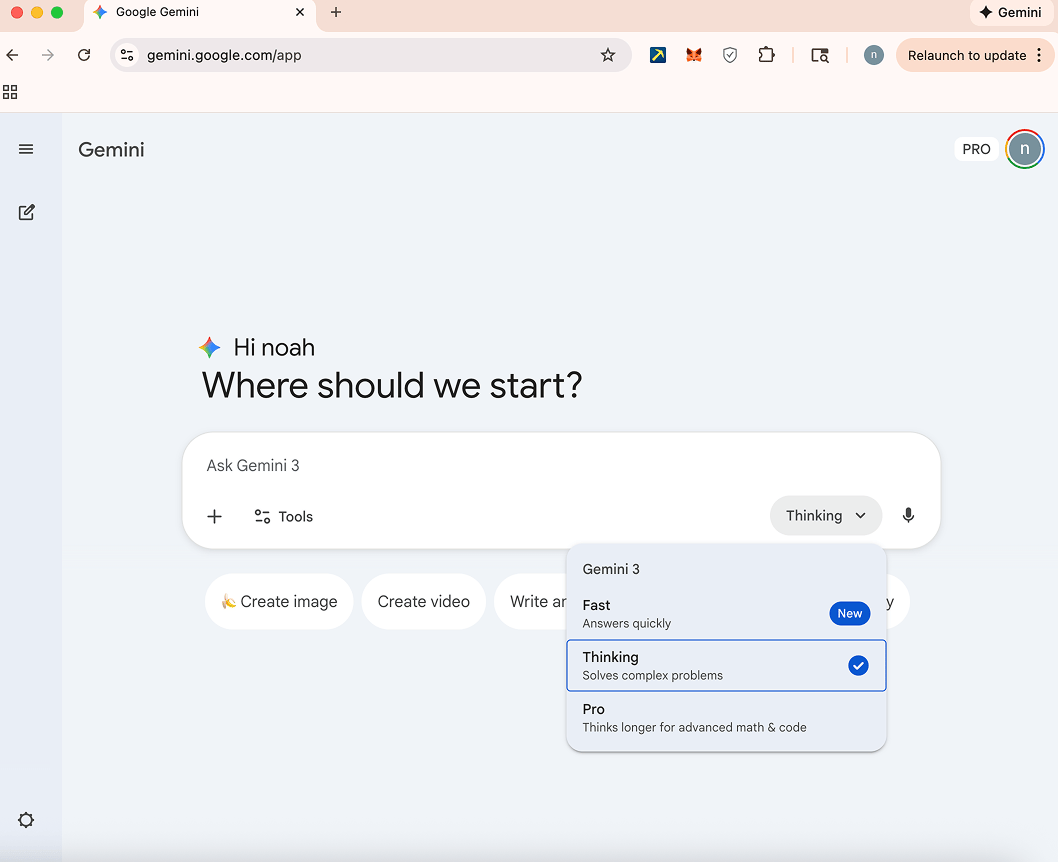

Most AI tools today let you switch between different models inside the same app. This is an easy feature to overlook, but it has a big impact on speed, quality, and cost.

In most LLM apps, such as ChatGPT, Claude, and Gemini, you can usually change the model from a dropdown or selector in the interface. For example, you might switch from a lighter, faster model to a more powerful one when you need a problem thought through carefully.

What this means:

Larger models are better at complex tasks, long context, and deep reasoning. In human terms, they’re smarter.

Smaller or faster models respond quicker and are often cheaper to run, but may miss something.

You don’t always need the “best” model for every task, but it helps to understand when to use what.

You’ve probably used these before, but for those who haven’t, here’s what the model selectors look like:

ChatGPT, which started providing access to legacy (older) models after the GPT-5 pushback.

Claude (Opus is Excellent)

Gemini (Thinking is Great)

For everyday questions, it doesn’t make a huge difference, but for work-related tasks, I highly suggest using a Thinking model, or in Claude’s case, Opus over Sonnet. You’ll hit usage limits faster with the better models, but IMO the difference in quality is worth the upgraded plan.

The real decision time comes when using AI through APIs. When developers build apps on top of models, they choose which model to call behind the scenes. Switching models in an API is often as simple as changing a model name, but the cost difference can be significant.

Usage is charged per 1M tokens, or about 750k words. That sounds like a lot, but if you have a ton of people using your app, it adds up quickly. OpenAI’s cheapest model is $0.60 per 1M tokens, while their most expensive is $600 per 1M tokens. Big difference.
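To make that gap concrete, here is a rough back-of-the-envelope sketch in Python. It uses only the two per-1M-token prices quoted above. The tier names, the user count, and the per-request token figures are made-up assumptions for illustration, and real pricing usually bills input and output tokens at different rates, which this ignores.

```python
# Prices per 1M tokens are the two figures quoted above.
# The tier names are hypothetical labels, not real model names.
PRICE_PER_MILLION_USD = {
    "cheapest-tier": 0.60,
    "priciest-tier": 600.00,
}


def monthly_tokens(users: int, requests_per_user: int, tokens_per_request: int) -> int:
    """Total tokens processed in a month under a simple usage assumption."""
    return users * requests_per_user * tokens_per_request


def estimate_cost_usd(model: str, tokens: int) -> float:
    """Estimated cost in USD for `tokens` tokens at the model's flat per-1M price."""
    return PRICE_PER_MILLION_USD[model] * tokens / 1_000_000


if __name__ == "__main__":
    # Assumed workload: 10,000 users, 30 requests each, 2,000 tokens per request.
    tokens = monthly_tokens(users=10_000, requests_per_user=30, tokens_per_request=2_000)
    for model in PRICE_PER_MILLION_USD:
        cost = estimate_cost_usd(model, tokens)
        print(f"{model}: ${cost:,.2f} per month")
```

Under those assumed numbers the app processes 600M tokens a month, which works out to roughly $360 on the cheapest tier versus roughly $360,000 on the most expensive one. Same app, same traffic, and a 1,000x difference from changing one model name.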

Knowing when to switch models helps you work more efficiently, save money, and get better results. Sometimes the smartest move isn’t a better prompt. It’s just picking the right model.

🐬 Startup Spotlight

Earth Species Project

Earth Species Project - Using AI to decode non-human communication.

The Problem: We share the planet with countless intelligent species, but we don’t understand them. From whales to birds to primates, animals exhibit complex communication behaviors that remain largely mysterious to humans.

The Solution: Earth Species Project (ESP) is using machine learning to decode animal communication. By applying techniques from natural language processing, unsupervised learning, and bioacoustics, ESP aims to identify structure and meaning in non-human vocalizations. Their long-term goal is to build tools that enable interspecies understanding and spark new conservation efforts rooted in empathy and science.

The Backstory: Founded in 2017 by Aza Raskin (co-founder of the Center for Humane Technology) and Britt Selvitelle (former Twitter engineer), Earth Species Project brings together researchers from AI, biology, and linguistics. Based in California, the nonprofit is supported by organizations like the Internet Archive and the National Geographic Society, and collaborates with global research labs and conservation groups to build the world’s largest open library of animal communication data.

My Thoughts: This is pretty wild. I have to imagine that if we understand what animals are saying at some point in the future, it will be a lot of (mate, eat, sleep). At the same time, animals would probably be pretty spooked if they heard us ‘talking’ back to them. Kind of a crazy concept but nonetheless an interesting example of new possibilities with AI tools. Not sure how viable a complete understanding will be but definitely worth following along.

“It’s not likely you’ll lose a job to AI. You’re going to lose the job to somebody who uses AI”

- Jensen Huang | NVIDIA CEO

The speaker at the AI event I was at pulled up the ‘Will Smith eating pasta’ example referenced in Issue 16.

Till Next Time,

Noah on AI