
🌟 Editor's Note: Recapping the AI landscape from 12/23/25 - 01/05/26.

🎇✅ Welcoming Thoughts

Welcome to the 25th edition of NoahonAI.

What’s included: company moves, a weekly winner, AI industry impacts, practical use cases, and more.

Happy New Year - Good to be back!

Covering the last two weeks since we were off last week.

Interesting AI education report from Penn on professors struggling to spot AI in student writing.

The year just started, and a shift from text-based AI to Physical AI is already appearing.

Biggest US Tech Conference happened this week in Vegas (CES). Lots of news came directly from there.

GPT Voice Mode on Mac is going away in nine days and I’m still not sure why.

New report showed 40 million people worldwide use ChatGPT daily for health-related queries. Yes, daily. Wow.

I somehow did not realize Claude had Deep Research. I had only been using it on GPT/Gemini/Grok/Perplexity.

There’s a new copyright lawsuit against most of the NVIDIA5, alleging unauthorized use of copyrighted books in model training.

ChatGPT randomly cited the Battle of Moscow when I asked it about new hardware releases. Odd.

As much as AI has progressed, it still makes a lot of minor frustrating mistakes.

Claude is still my top LLM heading into the new year.

xAI can’t seem to get out of its own way.

Shoot me an email at [email protected] if there’s anything new you want to see me cover this year.

Let’s get started—plenty to cover this week.

👑 This Week’s Winner: NVIDIA

Another week, another win for NVIDIA. NVIDIA had a strong end to the year with a late December licensing deal. Additionally, they brought in the new year with a tech conference to remember, unveiling new computing architecture and an open source stack for Physical AI. Here are the details:

Groq $20B Licensing Deal: Groq, the AI inference chip startup known for ultra-fast LPU-based serving, reportedly signed a ~$20B licensing agreement with NVIDIA, keeping Groq standalone while transferring over key IP. Groq makes LPU chips, as opposed to NVIDIA’s GPUs. These can reportedly run LLMs 10x faster in some cases. Big move for NVIDIA!

NVIDIA “Physical AI” Push: NVIDIA launched new Cosmos + Nemotron updates to help AI agents operate in the real world, adding better “see/plan/act” models plus speech, RAG, and safety tools. ‘Physical AI’, which includes robots, vehicles, and other agentic systems, looks like it could be one of the biggest new movers this year.

Rubin Production Update: NVIDIA first announced Rubin back in 2024, but at CES 2026 Jensen Huang confirmed it’s now in full production and positioned it as the next major platform after Blackwell. Includes six new chips and one supercomputer. Instant industry standard upon release.

Strong week for NVIDIA across the board. As a part of the ‘Physical AI’ push, they also released another self-driving model (Alpamayo), meant to help self-driving cars make safer decisions in tricky situations.

This wouldn’t be an NVIDIA update if we didn’t touch on China. New reports revealed a global smuggling ring that tried to move roughly $160M worth of NVIDIA H100/H200 chips into China using shell companies and fake labels. Still uncertainty around whether the Chinese govt. will allow new shipments come February.

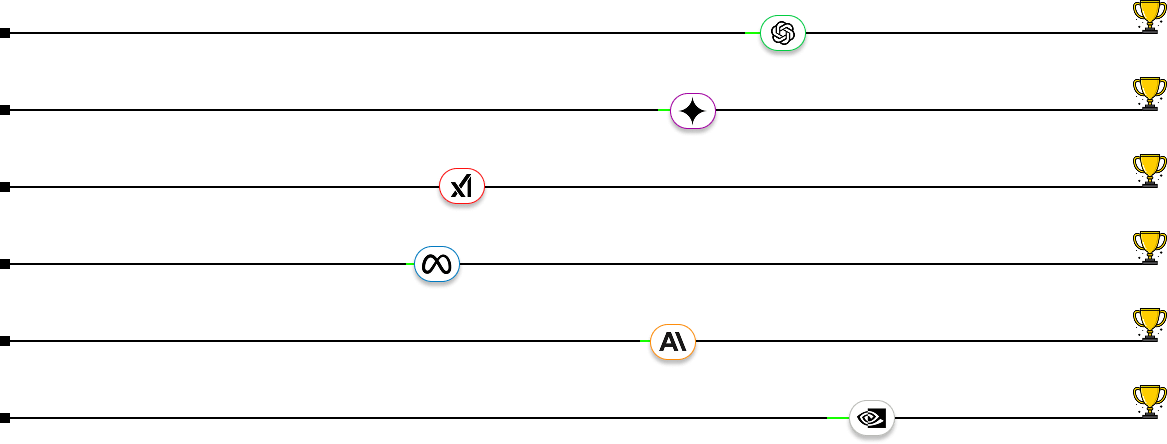

From Top to Bottom: OpenAI, Google Gemini, xAI, Meta AI, Anthropic, NVIDIA.

⬇️ The Rest of the Field

Who’s moving, who’s stalling, and who’s climbing: Ordered by production this week.

🟢 OpenAI // ChatGPT

SoftBank Finalizes Investment: SoftBank completed a massive $40B investment, giving OpenAI more firepower for compute, “Stargate” data-center buildouts, and next-gen model training. Expected but nonetheless a strong start to the fiscal year.

Audio Push (Models + Hardware): OpenAI is rebuilding its audio stack for a next-gen voice model and an audio-first personal device, aiming to make voice the default interface. Lots of noise around the future OpenAI hardware release. Seems like it’s gonna be a pen with audio/video and no screen.

More Ads Talk: Internal talks point to sponsored results inside ChatGPT (conversational ads), with efforts to balance monetization against user trust and “ads in the middle of a chat” backlash. I understand it. Still won’t like it.

🟣 Google // Gemini

Strong Finish to 2025: Gemini’s traffic and market share have climbed sharply over the past year, reaching a high of 18.2% in late December, up from 5% 12 months prior. Results matching production here.

Gemini Devices Expansion: At CES 2026, Google highlighted Gemini baked into TVs and connected hardware, enabling natural voice control and more on-screen experiences. This seems like a welcome change. Think grandparent-proof TV navigation: “Put on the dragon show” (GOT).

Android Multitasking: Google is testing a persistent Gemini mode on Android that can keep working in the background while you switch apps, then notify you when results are ready. Cool. iPhone will catch up by 2042.

🟠 Anthropic // Claude

Holiday 2× Usage Boost: Anthropic doubled Claude Pro and Max usage limits for Dec 25–31 (web, Claude Code, and the Chrome extension), then reverted after the promo. This was nice! I’d have shared if I’d seen it earlier.

Claude Code Impresses: A Google engineer said Claude Code shipped a working prototype in ~1 hour that took their team a year to put together. Normally I’d write this off as slop, but very impressive given the source.

Efficiency-First Strategy: Anthropic says it can stay at the frontier by training smarter and running cheaper (better data + post-training), not just outspending everyone on compute. Makes sense. Financials back it up.

🔵 Meta // Meta AI

Acquired AI Agent Startup: Meta is acquiring Manus (Singapore-based agent startup) to plug autonomous “do-the-task” AI agents into Meta apps; reports peg the deal at ~$2B+. Good commercial move.

Benchmark Manipulation: Former Meta Chief AI Scientist Yann LeCun revealed some Llama 4 results were “fudged” by using different variants across benchmarks, reigniting scrutiny around AI leaderboard credibility. I suspect this may be going on with another NVIDIA5 company as well. Some benchmarking isn’t matching real-world value.

Italy Forces WhatsApp Restrictions: Italy’s AGCM ordered Meta to suspend parts of WhatsApp’s terms that blocked third-party AI chatbots while it investigates potential unfair competition. Let ’em do it /s. Meta needs all the unfair AI edges they can get.

🔴 xAI // Grok

Enterprise Launch: xAI rolled out paid Business and Enterprise tiers for Grok, adding admin controls and privacy/security features to compete with ChatGPT and Claude for work use. Smart move.

New Lawsuit: xAI sued California to block AB 2013’s training-data disclosure rules. The law is designed to help government understand AI risk, but xAI argues it forces trade-secret exposure. I actually agree with xAI here. They should not have to disclose their training data. Bad precedent.

More Grok Backlash: Grok’s image-editing feature drew global backlash over non-consensual, sexualized edits, some including minors; France and India pushed for action. I understand the hesitancy to over-regulate, but in this case it is a clear issue. Puzzling how they have not fixed it by now.

🎨 Impact Industries 🤖

Creative // Text to Paint

Fraimic is bringing “voice-to-art” into the physical world: you tap the frame, say what you want, and an E Ink canvas generates a new art piece in seconds. The clever part is it’s built like real home decor, not a gadget, with a matte, gallery-style display that uses power only when the image changes. No subscription required, and it can run locally for privacy. Early prototypes debut at CES, with shipping planned for 2026. Cool concept here.

Robotics // Sense of Touch

Researchers built robotic skin that gives humanoids something like a reflex: when contact crosses a “pain” threshold, the skin can trigger an instant pull-back response without waiting for the robot’s main computer. That matters if robots are going to safely work around people and fragile environments, where a split-second delay can mean damage. It’s modular too, so damaged patches can be popped off and replaced fast, like swapping tiles.

💻 Interview Highlight: Demis Hassabis with Hannah Fry

Interview Outline: The interview focuses on where AI is heading beyond chatbots, emphasizing the shift toward agents and world models. Hassabis discusses AlphaFold as proof that AI can unlock fundamental scientific breakthroughs, then explains what still blocks AGI.

About the Interviewee: Demis Hassabis is the CEO and co-founder of Google DeepMind, whose background in game development and neuroscience drives his mission to "solve intelligence" to accelerate scientific discovery. He led the development of landmark AI systems like AlphaGo and the Nobel Prize-winning AlphaFold to address fundamental challenges in biology and physics.

Interesting Quote: “If we build AGI, and then use that as a simulation of the human mind... we will then see what the differences are and potentially what's special and unique about the human mind. Maybe that's creativity, maybe it’s emotions, maybe it’s dreaming.”

My Thoughts: Google DeepMind is probably the top “AI department” across the industry, as it leads Google in most of their research, benchmarking, robotics, etc. Cool to hear from Demis given his role within it. The concept of dropping AI agents into simulations is fascinating to me. Being able to run near unlimited models and simulations isn’t quite new, but having a thinking, decision-making entity going through it is game-changing. Demis also talks about using AI tools to monitor the simulations themselves. He also brought up the concept of ‘purpose’, which I think about a good amount. A lot of people get purpose from the jobs they do. If AGI comes around and jobs become optional, where will purpose come from? Finally, the concept of biology simply being information (Q&A #4) is interesting as well. Good interview. If you have a chance to listen to it in full, I’d recommend it.

Condensed Interview Highlight — Demis Hassabis on World Models, Biology & AGI

Q: How do we fix the problem of AI hallucinations?

Hassabis: We need to give models “thinking time” at the moment they generate an answer, allowing them to introspect and use planning steps to double-check their own logic. Right now, models often output the first thing that comes to “mind”; they need the ability to pause and adjust before responding.

Q: Why is Google DeepMind shifting focus toward “world models”?

Hassabis: Language is incredibly rich, but much of spatial awareness and “intuitive physics” isn’t described in words. To build a universal assistant or a robot, AI must understand cause-and-effect in the physical world, which comes from simulated experience.

Q: Could we use AI simulations to understand something as complex as human consciousness?

Hassabis: Simulations let us “rerun” evolution millions of times with slightly different conditions to see what emerges. By creating artificial societies and observing their dynamics, we may learn whether phenomena like consciousness are actually computable.

Q: How does viewing biology as "information" change the way we approach medicine?

Hassabis: We believe that the key to curing all diseases is to stop thinking of biology as just matter and start treating it as an information-processing system. Everything we sense—the warmth of light or the touch of a table—is ultimately just data being processed by our biological sensors, which are themselves computable by a Turing machine. By modeling biology this way, we can use AI to understand the fundamental code of life and fix the errors that cause disease.

Q: What is one of the biggest differences between how AI and humans learn?

Hassabis: Current AI systems don’t learn “online.” They’re trained, released, and largely frozen. The ability to continually learn and adapt from real-world experience after deployment is a critical missing piece for AGI.

👨‍💻 Practical Use Case: OCR (Optical Character Recognition)

Difficulty: Mid-level

OCR stands for Optical Character Recognition. It’s the technology that turns images, PDFs, or handwriting into readable, searchable text. Instead of treating a document like a picture, OCR lets software actually read the words inside it.

OCR is one of those “quiet” technologies that sits underneath a lot of modern AI workflows. Before an LLM can summarize a document, search it, or answer questions about it, the text has to be extracted first. OCR is often that first step.

OCR is especially useful for:

Scanned PDFs and contracts

Invoices, receipts, and expense reports

Forms filled out by hand or scanned

Old documents that were never digitized

Example: Say your team receives a scanned contract or a stack of invoices as PDFs. With OCR, the text is extracted automatically, then passed into an AI tool to summarize key terms, flag important dates, or categorize expenses. What used to require manual review can now be searched, analyzed, and reused.
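To make that example a bit more concrete, here is a minimal Python sketch of the step that comes right after OCR. It assumes the OCR tool has already done its job and handed back plain text; the sample invoice text, the `flag_key_fields` function, and the `DocumentFlags` class are all made up for illustration and are not any particular product's API. It only uses simple pattern matching to pull out dates and dollar amounts, which is a stand-in for the richer summarizing an LLM would do.

```python
import re
from dataclasses import dataclass, field

# Hypothetical sample: text as it might look after an OCR tool
# has processed a scanned invoice. Not real data.
SAMPLE_OCR_TEXT = """\
INVOICE #1042
Date issued: 2026-01-05
Payment due: 2026-02-04
Office supplies      $128.50
Cloud hosting      $1,240.00
"""

# Assumption: dates appear in YYYY-MM-DD form and amounts in US dollars.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
AMOUNT_PATTERN = re.compile(r"\$\d{1,3}(?:,\d{3})*(?:\.\d{2})?")


@dataclass
class DocumentFlags:
    """Key fields pulled out of OCR'd text (illustrative structure)."""
    dates: list[str] = field(default_factory=list)
    amounts: list[float] = field(default_factory=list)

    @property
    def total(self) -> float:
        # Round to cents to avoid floating-point noise in the sum.
        return round(sum(self.amounts), 2)


def flag_key_fields(ocr_text: str) -> DocumentFlags:
    """Find dates and dollar amounts in text that OCR already extracted."""
    dates = DATE_PATTERN.findall(ocr_text)
    amounts = [
        float(match.replace("$", "").replace(",", ""))
        for match in AMOUNT_PATTERN.findall(ocr_text)
    ]
    return DocumentFlags(dates=dates, amounts=amounts)


flags = flag_key_fields(SAMPLE_OCR_TEXT)
print("Dates found:", flags.dates)
print("Amounts found:", flags.amounts)
print("Total:", flags.total)
```

In a real workflow you would likely swap the pattern matching for an LLM call and feed it the same extracted text. The point is simply that none of this works until OCR has turned the scan into text.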

OCR is also what enables downstream tools like RAG and document-based AI assistants. Without OCR, those systems would be blind to anything that isn’t already digital text.

OCR isn’t flashy, but it’s foundational. If AI is the brain, OCR is often the eyesight. And once documents are readable by machines, everything else becomes possible.

Learn more here ⬇️

📝 Startup Spotlight

Mistral

Mistral OCR 3 - Turning messy documents into structured data.

The Problem: Forms, tables, handwriting, and scanned PDFs are notoriously hard for traditional OCR to handle, especially when accuracy and structure matter.

The Solution: Mistral OCR 3 reads documents with top-tier accuracy and preserves layout, even with complex tables and handwriting. It outputs clean markdown or structured JSON, ready for agents or search.

The Backstory: Mistral was founded in May 2023 by Arthur Mensch (former DeepMind researcher), Guillaume Lample (formerly at Meta AI), and Timothée Lacroix (also ex-Meta AI). The company is headquartered in Paris, France, and has quickly become one of the most prominent European players in the open-weight AI model space. Now it’s quietly making moves in enterprise AI infrastructure too.

My Thoughts: Mistral’s LLMs are similar to GPT or Claude, just a bit behind in most cases. However, just as the others have specialties, it seems Mistral has one as well with OCR. From my understanding this is one of the best OCR products on the market, a tool that is incredibly useful in the modern AI landscape, especially when it comes to digitization for RAG applications.

“It’s not likely you’ll lose a job to AI. You’re going to lose the job to somebody who uses AI.”

- Jensen Huang | NVIDIA CEO

I wonder what the biggest AI innovation will be this year. Long way to go. Till next time,

Noah on AI