In partnership with

🌟 Editor's Note: Recapping the AI landscape from 10/14/25 - 10/20/25.

🎇✅ Welcoming Thoughts

Welcome to the 15th edition of NoahonAI.

What’s included: company moves, a weekly winner, AI industry impacts, practical use cases, and more.

Bit of a long one today, but lots of good info.

I’ve sent out a bunch of interview requests to folks at NVIDIA and a few other companies… fingers crossed.

Decided I’ll spotlight an interview I find interesting if I don’t have a guest on.

I’m now officially using Claude more than ChatGPT for any work tasks.

This TBPN show is apparently huge in SF right now; they cover tech moves like sports… I’m not affiliated, but I find it pretty funny.

Robotics and Medicine continue to be the most interesting impact industries.

Read an interesting article on OpenAI’s finances; it noted that only ~5% of ChatGPT users pay for a subscription.

Spent a lot of time testing prompts last week and I can’t say it’s the most exciting practice in the world… but definitely useful.

Seems like xAI and Meta are always fighting for last place; maybe I’ll drop one and bring in Perplexity.

Let’s get started—plenty to cover this week.

👑 This Week’s Winner: Anthropic // Claude

Claude takes the crown this week as Anthropic continues to make huge strides in enterprise AI: adding capabilities, extending partnerships, and gaining market share. Three major moves:

Expanded Salesforce Partnership: Claude is now available within Agentforce 360 for regulated industries like financial services and healthcare. The deal puts Claude inside Salesforce's "Trust Boundary" for enterprise-grade security and compliance.

Microsoft 365 Integration: Enterprise Claude can now access SharePoint, OneDrive, Outlook, and Teams. You can now select Claude within Microsoft products, including as the model for Copilot's "Researcher agent."

Introduced Claude for Life Sciences: This is a research-focused version of Claude built on Sonnet 4.5, connecting with tools like Benchling, 10x Genomics, and PubMed to help scientists analyze data and draft reports.

Additionally, Anthropic launched Claude Haiku 4.5, which offers coding performance similar to Sonnet 4 at one-third the cost. They also rolled out Claude Skills: custom modules that teach Claude company-specific tasks. They are executing an enterprise adoption plan to a tee. Very impressive stuff.

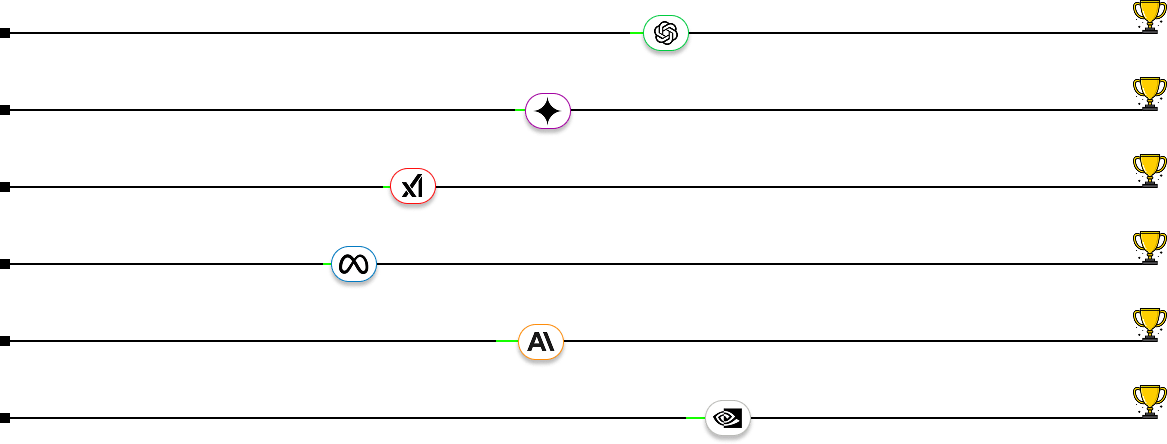

From Top to Bottom: OpenAI, Google Gemini, xAI, Meta AI, Anthropic, NVIDIA.

⬇️ The Rest of the Field

Who’s moving, who’s stalling, and who’s climbing, ordered by this week’s output.

⚪️ NVIDIA

Massive Data Center Deal: NVIDIA joined BlackRock and Microsoft in a $40B acquisition of Aligned Data Centers, adding 5 GW of capacity across 50–80 sites and expanding into AI infrastructure investment. This makes sense for NVIDIA given the amount of compute they’re supplying.

Sovereign AI: NVIDIA and Oracle are building sovereign AI systems in Abu Dhabi, keeping government data local and in-country. NVIDIA can use this deal as a template for selling secure, local AI to other governments.

100% Out of China: NVIDIA CEO Jensen Huang confirmed that they are currently completely withdrawn from the China market. They will exclude it from revenue forecasts until restrictions loosen up. Surprised by this.

🟢 OpenAI // ChatGPT

WalmartGPT: OpenAI and Walmart announced a partnership enabling in-chat product purchases through ChatGPT’s new Instant Checkout feature. Customers can buy Walmart or Sam’s Club items directly via chat. Just the beginning of in-chat capability.

Long-Term Memory: ChatGPT now auto-manages saved memories, prioritizing relevant details and reducing “memory full” errors. The change promises better personalization and context retention over time. Improved memory and retention is incredibly important for AI growth.

Adult Mode: Starting in December, verified adults will be able to access “mature content” and more personalized companion modes in ChatGPT, following Grok and Meta. Not sure I understand the obsession with this among the major LLMs.

🟣 Google // Gemini

Gemini 3 Inbound: Internal leaks say it could be out as early as this week. Reports point to better memory, faster performance, and richer video/image reasoning. Should be a pretty good upgrade.

Maps API Tool: Google added Maps grounding to the Gemini API, connecting AI answers to real-world data from 250M+ places for more accurate, location-aware responses. This is cool! Excited to see how people build with it.

Venture Into Crypto? Wrong Gemini: The Solana-linked credit product making headlines this week comes from Gemini the crypto exchange, not Google’s Gemini. It marks the exchange’s push into crypto-native consumer finance, bridging traditional credit and DeFi systems.

🔵 Meta // Meta AI

Partnership with Arm Holdings: Meta partnered with Arm to optimize its AI infrastructure across data centers. The goal is to make Meta’s AI models and ranking systems more efficient and reduce reliance on other chip architectures. The work is primarily on CPUs rather than GPUs, so it’s only a slight diversification away from NVIDIA.

Teen Safety Controls: Meta added new parent tools to block or monitor teen AI chats and launched a “13+” content filter on Instagram to limit exposure to mature material. Good.

El Paso Data Center: Meta broke ground on a $1.5B AI data center in El Paso, Texas, built to scale to 1 GW of power and support its expanding AI operations.

🔴 xAI // Grok

En Route to AGI? Elon said Grok 5 has a 10% chance of achieving AGI. He defined AGI as “anything a human with a computer can do,” estimating that level of capability is three to five years away. More likely it’s 5+ years away, as outlined in the interview below.

X Feed Overhaul: Elon announced that X’s recommendation system will soon be powered entirely by Grok. The system will analyze more than 100 million posts and videos per day to deliver personalized feeds. Lotta compute. Cool if it works.

Coding Challenge: After Andrej Karpathy said Grok 5 still trails GPT-4 and that AGI may be a decade away, Elon challenged him to a coding contest against Grok, comparing it to “Kasparov vs. Deep Blue.”

🤖 Impact Industries 🏥

Robotics // Disaster Response

In large-scale simulations in Georgia, the University of Maryland’s RoboScout team showcased how AI-powered drones and robot dogs can handle disaster relief. Autonomous drones scanned for survivors and relayed locations to ground robots like Boston Dynamics’ Spot, which used sensors and a language model to assess vitals and communicate with victims. Backed by DARPA’s Triage Challenge, the system could soon help medics prioritize and treat patients faster during mass-casualty events. This is dystopian and very cool.

Medical // Cancer Discovery

Google and Yale researchers used C2S-Scale 27B, a model built on Google DeepMind’s open Gemma family, to generate a new hypothesis about cancer cell behavior: that the drug silmitasertib could help make “cold” tumors more visible to the immune system. The prediction was then confirmed in lab experiments on human cells. The result shows how open, efficient models like Gemma can generate novel biological knowledge. This is a massive deal: AI proposing a previously unknown treatment approach. It pushes past the criticism that AI simply regurgitates existing knowledge. Impressive.

💻 Interview Highlight: Andrej Karpathy & Dwarkesh Patel

Interview Outline: Andrej Karpathy believes that achieving truly capable AI agents, akin to human employees, will likely take a decade (2025 to 2035) due to current "cognitive deficits." While existing tools like Claude and Codex are impressive, they still can’t handle long-horizon tasks autonomously.

About the Interviewee: Andrej Karpathy is one of the world’s most influential AI researchers and engineers. Formerly Director of AI at Tesla, founding member of OpenAI, and a leading voice on deep learning and computer vision, Karpathy has helped shape some of the most important systems in modern AI.

Interesting Quote: “Humans aren’t great at memorization, and that’s a feature, not a flaw. It forces us to find general patterns rather than memorize specifics. LLMs, on the other hand, excel at memorization; they can regurgitate data after a single exposure. But that ability distracts them from abstraction. The goal should be to give AI less memory, so it’s forced to think, reason, and retrieve information, not just recall it.”

My Thoughts: This interview was blowing up on socials this week and was a really interesting watch. Andrej is a very smart guy, and if he believes AGI (by his definition) is ~10 years out, I’ll take his word for it. I think we’re going to see new models released and called AGI over the next few years, but true, full-scale AGI needs more time. That doesn’t mean we won’t see tons of progress in other areas. He’s incredibly bullish on AI but also very realistic on the timeline.


Dwarkesh Patel: How do you define AGI, and what’s missing today?

Andrej Karpathy: I use the OpenAI definition: a highly autonomous system that outperforms humans at most economically valuable work. Today’s models don’t fit that yet—they can’t learn continuously, use computers reliably, or combine vision, language, and action like humans can. But every part of the stack—data, hardware, optimization—is improving together. There’s no single breakthrough coming, just steady, compounding progress.

Dwarkesh Patel: Why does AI seem great at some things and clueless at others?

Andrej Karpathy: That’s what I call jagged intelligence. Models are trained on uneven internet data, so they overperform on common, well-represented tasks like writing or coding but underperform on math or multi-step logic. It’s not randomness—it reflects what’s in the data. As training quality and diversity improve, that unevenness should smooth out.

Dwarkesh Patel: AI agents look impressive in demos, but why do they fall apart in longer workflows?

Andrej Karpathy: Reliability. It’s easy to make something that works most of the time, but every extra “nine” of reliability (99, 99.9, 99.99 percent) takes another major push of work. One small failure in a chain can break the whole process. We’ve seen this with self-driving cars and large-scale software. It’s an engineering problem, but it’ll take time to reach real-world dependability.
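To make the “one small failure in a chain” point concrete, here is a back-of-the-envelope sketch (my illustration, not something from the interview). It assumes, as a simplification, that each step of an agent workflow succeeds independently with the same probability p, so an n-step chain only finishes cleanly with probability p^n. The function name `chain_success` and the 100-step workflow length are hypothetical.

```python
def chain_success(p: float, n: int) -> float:
    """Probability that all n steps succeed, each with probability p.

    Simplifying assumption: steps fail independently and every step
    has the same per-step reliability p.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    return p ** n


if __name__ == "__main__":
    steps = 100  # hypothetical length of a long agent workflow
    for p in (0.99, 0.999, 0.9999):
        print(f"per-step {p:.2%} -> {steps}-step chain {chain_success(p, steps):.1%}")
```

Under these assumptions, a step that works 99% of the time gives a 100-step chain that completes only about 37% of the time, while 99.9% per step gets the same chain to roughly 90%. Real agent failures are rarely independent, so treat this as intuition rather than a model.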

Dwarkesh Patel: Why can’t large models remember what we tell them between chats?

Andrej Karpathy: Their core weights are frozen after training—they lack continual learning. Some systems fake memory with retrieval tools, but the model itself doesn’t update. The challenge is building a safe, stable way for models to learn from new information without breaking what they already know.

Dwarkesh Patel: You once said AIs are like “people spirits,” not “animals.” What did you mean?

Andrej Karpathy: Humans are embodied—we have instincts, senses, and lived experience. AIs are disembodied; they exist in data and language. They imitate our reasoning without our context. They’re missing grounding in the physical and emotional world. As they gain multimodal perception and interact with reality, they’ll start filling those gaps—but they’ll always think a bit differently from us.

Dwarkesh Patel: What’s a future use of AI you’re genuinely excited about?

Andrej Karpathy: Education. Imagine a perfect tutor that adapts to you in real time, never gets tired, and makes learning genuinely fun. People could learn anything at any level—just for curiosity or self-improvement. It’s not utopian; it’s a technical problem we can actually solve. When learning feels good and personalized, people go much further than they think they can.

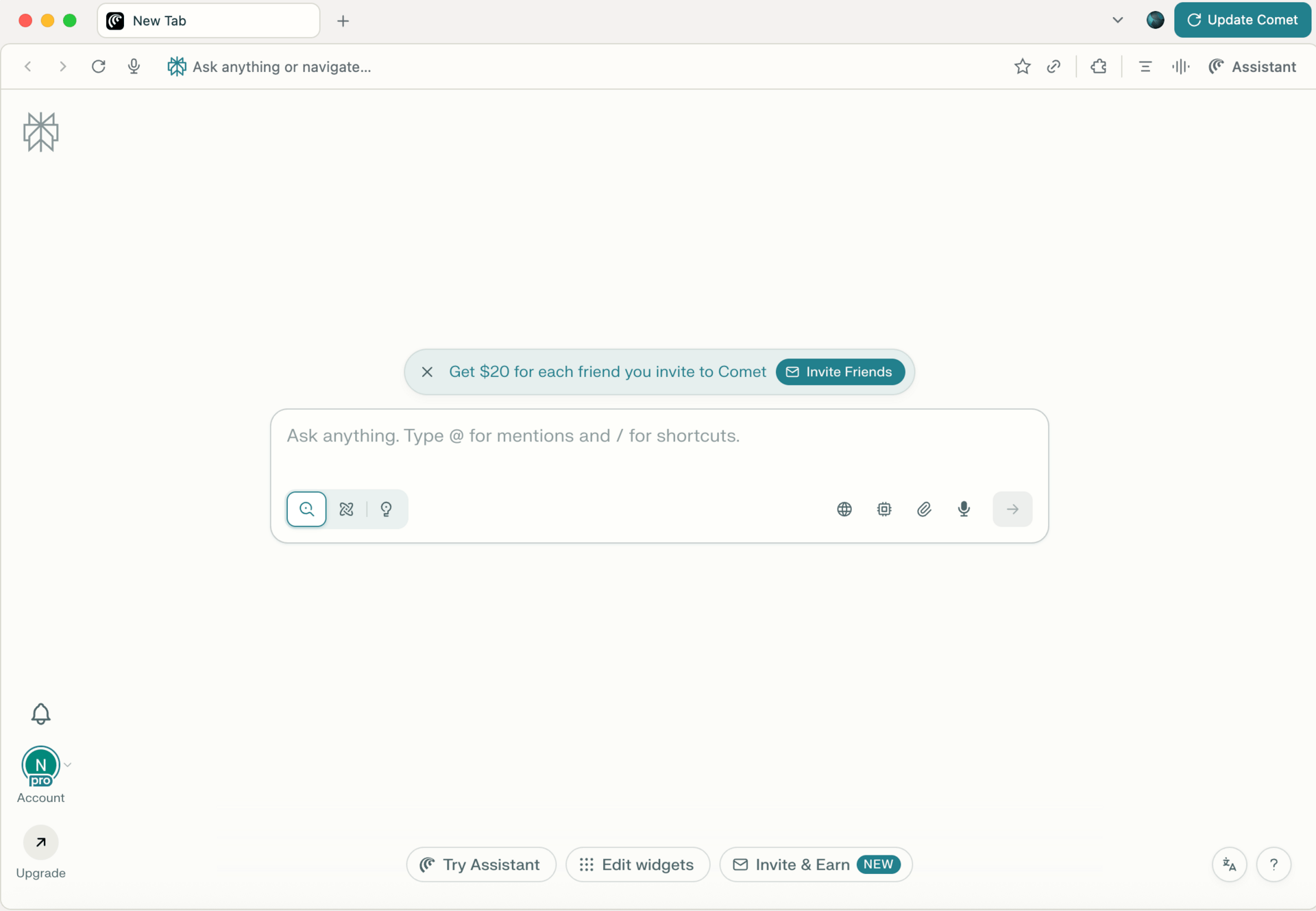

👨‍💻 Practical Use Case: AI Browsing (Perplexity’s Comet)

Difficulty: Mid-Level

In last week’s interview, we talked about what the next browsing experience might look like as AI evolves. There’s the traditional “clicks-and-tabs” version we’re all used to, and Craig pointed out something much simpler: a future where we chat with emotionally intelligent avatars who browse, read, and act on our behalf.

We’re not quite there yet, but let’s talk about where we are today: the interim stage.

Perplexity, one of the more popular AI assistants we don’t usually cover in the Race, recently released an AI browser called Comet. I tested it out this week, and here’s what I found.

Base browser interface (just chat)

The browser feels more like an AI assistant than a traditional web browser. You type or speak commands, like “Go to ESPN,” “Summarize this page,” or “Draft an email,” and the system opens the necessary tabs and starts the task.

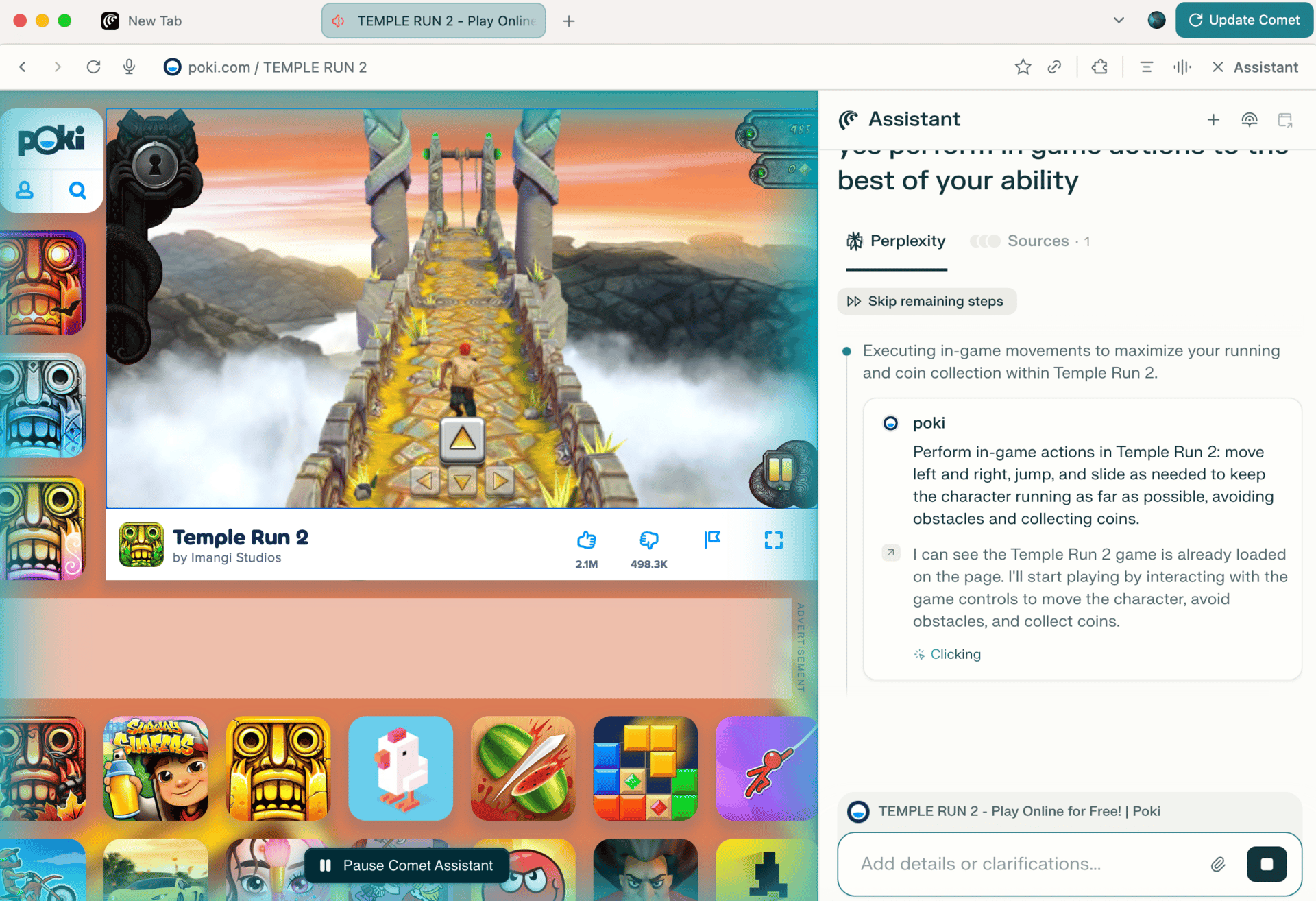

Mobile game test (gameplay takeover)

Inside Comet, I tested the assistant’s ability to take control of the page. When I asked it to play a mobile game, it navigated to the site, launched the game, and even pressed start, but it couldn’t actually follow along with the in-game controls.

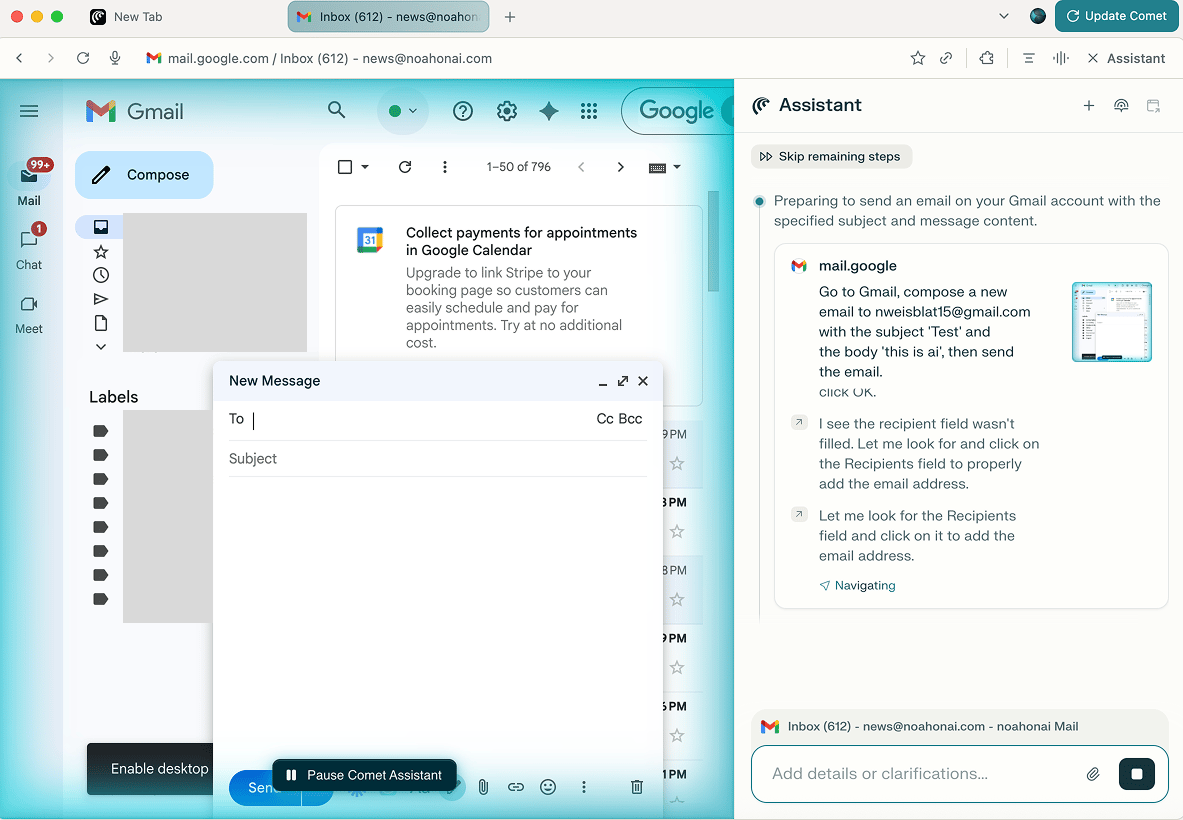

Email navigation test (draft + send)

I also tested the assistant on a more practical task: composing an email. It navigated to Gmail, drafted a full message, and formatted it correctly, but had trouble identifying who to send it to, even though that was in the prompt.

There are a few other AI browsing features I want to test. Similar to the “chatting with apps” use case from last week, I don’t think it’s fully there yet, but there are definitely more use cases than the ones I tried. I found a video that goes a bit more in-depth on Comet if you want to check it out!

👋🏼 Startup Spotlight

HelloMyFriend

HelloMyFriend: AI companions built for how neurodivergent kids learn, connect, and grow.

The Problem: ASD diagnoses have surged—now roughly 1 in 31 U.S. children—while access to effective social-skills support remains limited and expensive, leaving fewer than 40% with adequate intervention. Families and schools need daily, scalable practice that’s affirming—not corrective.

The Solution: HelloMyFriend is an emotionally intelligent, neuro-affirming digital companion that helps neurodivergent kids safely practice real conversations and express what they feel—without pressure to mask or conform. Through lifelike avatars, children engage in short, meaningful dialogues that model empathy and curiosity, helping them build confidence and connection on their own terms. It doesn’t replace human connection—it expands access to it.

The Backstory: Born from the experience of watching a neurodivergent child be misperceived and learn to mask, HelloMyFriend began with a simple desire—to create a place where every child can feel seen, heard, and supported. Determined to give families an affirming, accessible resource, we built a tool that turns daily, gentle practice into confident connection for children and practical support for parents.

My Thoughts: I think AI has a meaningful place in education and in supporting kids, and the HelloMyFriend application is a good example of this. Parents, teachers, and caregivers only have so much time and energy, and hyper-specialized tools like this can make a huge impact in young lives.

“It’s not likely you’ll lose a job to AI. You’re going to lose the job to somebody who uses AI”

- Jensen Huang | NVIDIA CEO

Sometimes you forget how much you rely on LLMs until AWS goes down.

Till Next Time,

Noah on AI

VERN Makes AI More Human.

VERN is an Emotion Recognition System (ERS) that detects real human emotions—like anger, fear, joy, and sadness—in every sentence of a conversation. It’s what makes AI truly empathetic, responsive, and trusted.

Here’s how you can use it: